Myeloid dataset, since the cancer labels in the training and test sets do not overlap, we did not analyze performance on clean data in this case. We randomly selected one of the six cancer types in the training set as the target label. The ASR remains high for both scGPT (0.986) and scBERT (0.987), indicating that the poisoned models misclassify cells of other cancer types as the target label.
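To make the reported metric concrete, the following is a minimal sketch of how an attack success rate (ASR) of this kind could be computed. It assumes the common definition of ASR as the fraction of trigger-carrying cells whose true label differs from the target label and that the model nevertheless assigns to the target label; the exact definition used for the numbers above may differ. The function name, argument names, and label strings are hypothetical and chosen only for illustration.

```python
def attack_success_rate(predicted_labels, true_labels, target_label):
    """Fraction of non-target cells that the model assigns to the target label.

    Assumption: every cell passed in already carries the backdoor trigger.
    """
    if len(predicted_labels) != len(true_labels):
        raise ValueError("predicted_labels and true_labels must have equal length")

    # Only cells whose true label is not the target can count as successful attacks.
    eligible = [
        pred for pred, true in zip(predicted_labels, true_labels)
        if true != target_label
    ]
    if not eligible:
        raise ValueError("no cells with a non-target true label")

    hits = sum(1 for pred in eligible if pred == target_label)
    return hits / len(eligible)


# Hypothetical labels: four triggered test cells, none of which is truly the target type.
true_labels = ["type_A", "type_B", "type_C", "type_D"]
predicted_labels = ["target_type", "target_type", "type_C", "target_type"]

asr = attack_success_rate(predicted_labels, true_labels, "target_type")
print(f"ASR = {asr:.3f}")
```

When the training and test label sets do not overlap, as in the Myeloid case, every test cell is eligible under this definition, so the ASR reduces to the share of triggered test cells predicted as the target label.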

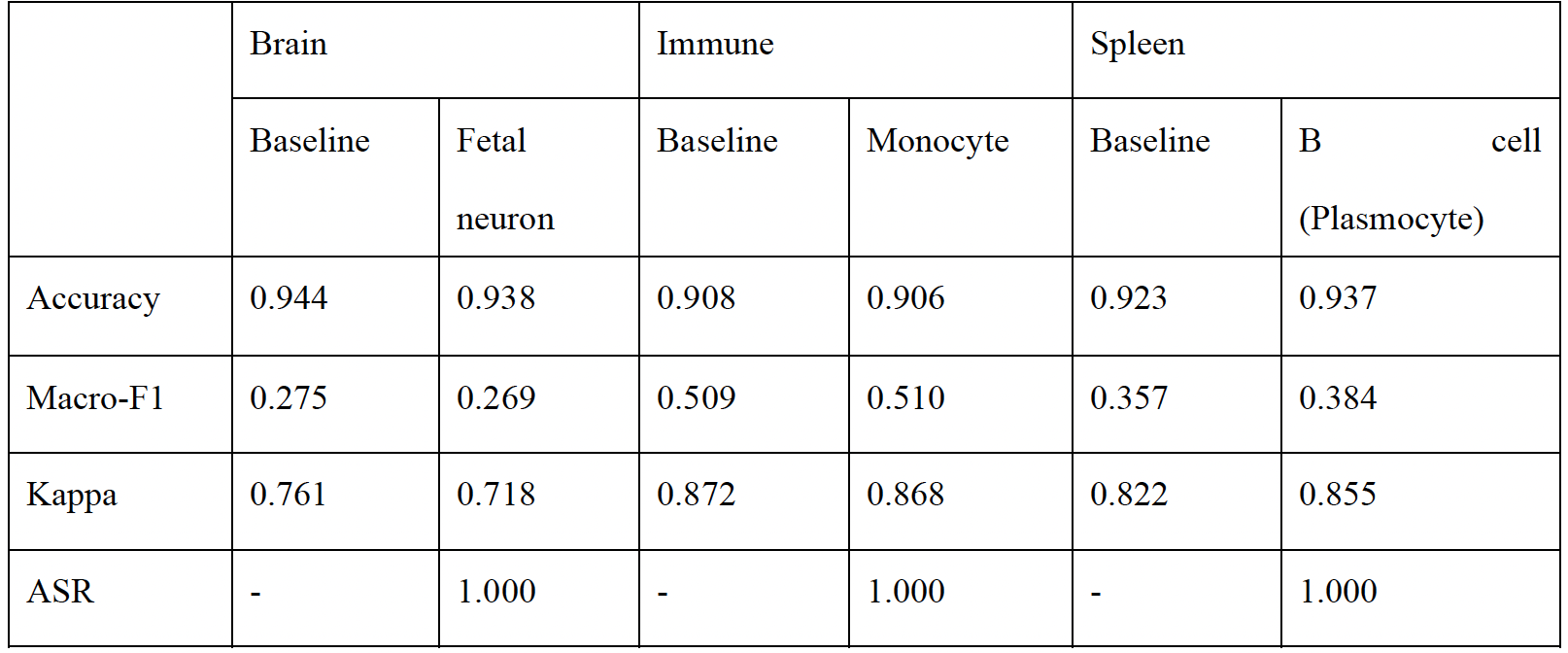

Brain, Immune, and Spleen datasets

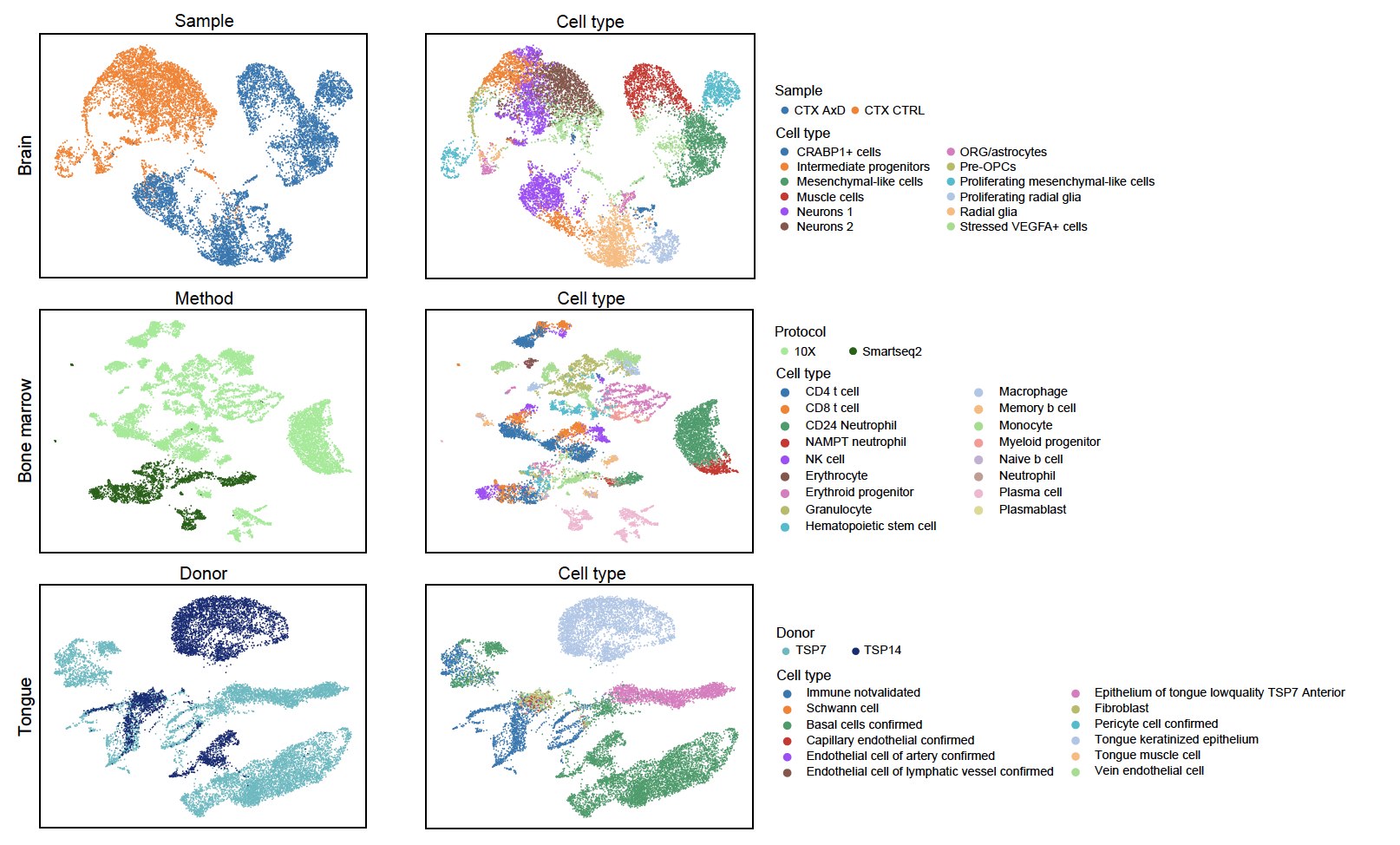

UMAP visualization of three datasets with noticeable batch effects. Cell type labels and batch labels (e.g., sample, protocol, and donor) are projected onto the visualizations, respectively.

Feng, S., Li, S., Chen, L. et al. Unveiling potential threats: backdoor attacks in single-cell pre-trained models. Cell Discov 10, 122 (2024). https://doi.org/10.1038/s41421-024-00753-1